Exploring the Continuing Efforts of the Kremlin’s Bot Campaigns

The world is well aware of the information campaigns Russia has used to steer public opinion and online discussion in a pro-government direction. Several investigations have shown that these campaigns originated from the media empire of the late Wagner Group chief, Evgeniy Prigozhin, whose operation was colloquially known as the “Troll Factory.” But what has happened since Prigozhin’s death? How has it affected the online campaigns, companies, and initiatives reputed to have influenced the 2016 American elections?

Prigozhin’s death has led to significant changes in the structure and operations of online influence campaigns. His passing created a power vacuum, resulting in shifts within the companies and media outlets he controlled. Without Prigozhin’s direct leadership, these operations have experienced organizational disruptions and possibly a decline in coordination, even though the campaigns remain active online.

Despite these setbacks, the influence campaigns continue, albeit with potentially reduced effectiveness. The Russian state and associated entities have maintained their strategic goals of manipulating public discourse, but the loss of a key figure like Prigozhin may have diluted their capacity to execute these operations with the same level of impact and cohesion as before.

WNM presents findings on the ongoing campaigns conducted by the same entities responsible for earlier Russian online influence operations. The accounts involved, often referred to as “bots” or “kremlebots” (Russian slang for the Kremlin’s bots), are run by organized companies, offices, or departments staffed by paid human users and sometimes supplemented by automated accounts.

Nowadays, with improved bot defenses on social media platforms, automated campaigns are encountered much less frequently. Instead, individuals work in an organized and consistent manner to disseminate specific agendas. Although these “bots” are human users, they are easy to distinguish by their repeated messages and uniform manner of speaking. They are prohibited from using certain vocabulary that could discredit the propaganda agendas.

The presence of “kremlebots” became widely noticed in the 2010s, primarily on Russia’s largest social network, VKontakte, and on LiveJournal, a popular Russian blogging platform. These platforms were significant not only for their widespread use but also because many Russian opposition figures, including Alexei Navalny, began their media careers there.

The “bots” built up networks by commenting on and replying to one another, reinforcing pro-government narratives and picking fights with users who held opposing views. They also exploited international news events to promote opinions or agendas beneficial to the pro-Russian side.

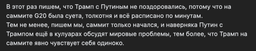

For example, according to an account that claims to have worked for the “Troll Factory,” the task for the day was to write that Trump didn’t greet Putin because “at the G20 summit there was bustle, jostling and everything was scheduled to the minute”: “Nevertheless, we write, the summit has just begun, and Putin and Trump will probably discuss world problems on the sidelines, especially since Trump clearly feels lonely at the summit.”

Screenshot from the “Kremlebot Confession” group on VKontakte revealing the propaganda agenda for the G20 summit in 2018. (Source: VK)

The Troll Factories have been reported to be based in Saint Petersburg, primarily in Olgino, a historic neighborhood of the city. Olgino was associated with the office of the Internet Research Agency, which paid people to post comments and sustain discussions online.

According to Google Trends data for the last 12 months, search traffic from this location is still active:

Google Trends for “Навальный [Navalny]” shows the most traffic coming from Saint Petersburg.

Within Saint Petersburg and its suburbs, the most traffic comes from Volodarskaya, Pargolovo, and Olgino.

The density of web searches for “Надеждин [Nadezhdin]” (Boris Nadezhdin, a Russian opposition politician who tried to register as a candidate in the 2024 presidential election) shows the same locations: Volodarskaya, Olgino, and Pargolovo.

Analysis of the behavior

Although Russian bot campaigns were accused in 2016 of influencing international affairs, including the American elections, these efforts now appear to be focused primarily within Russia, targeting the Russian opposition on YouTube.

A comparison of web searches for “Navalny” in Russian and English in 2016 and 2024 shows that in 2016, English-language queries exceeded those from Russia’s most populous areas, including Saint Petersburg and Moscow. In 2024, the search dynamics for “Navalny” appear more natural: most searches are in Russian and concentrated in Moscow and Saint Petersburg. (Source: Google Trends)

Our team set out to analyze the “bots’” behavior on YouTube, since the campaigns are already well documented on X and on VKontakte (VK) and other Russian-owned platforms.

YouTube remains a crucial source of information for the Russian population, despite the government’s persistent efforts to block it while developing Russian-controlled alternative video streaming platforms and services. However, the platform offers little transparency about users’ profiles and connections: a channel can be created with only a username and its creation date visible. Because a Google account, which is required for a YouTube channel, is easy to create, “bots” can manage and manipulate multiple accounts and channels.

YouTube also offers no way to search by a user’s interactions with videos, which lets bots or coordinated campaigns mass-target multiple videos or channels without being noticed. This lack of transparency makes these campaigns difficult to discover. WNM examined several cases of platform manipulation in support of consolidated pro-Russian propaganda efforts.

The “bots’” attack on Yulia Navalnaya

The YouTube channel “Navalny LIVE” posted a video titled “Speech by Yulia Navalnaya and FBK. Deutsche Welle Freedom of Expression Award” on June 6th. The comments under this video quickly filled with the same insulting rhetoric and repetitive narratives, mirroring those used by Russian propagandists to discredit Yulia Navalnaya and her message:

A comment saying: “What’s wrong with her speech? She mumbles something incomprehensibly, as if she’s drunk, or is she so worried that she couldn’t shake the regime and didn’t continue Lyokha’s [nickname for Alexei] work, negating everything by going to the polls. And the fact that she turned out there… by the way, this time there weren’t enough cases with “zelyonka” [brilliant green, an antiseptic solution widely used in the former Soviet Union that has recently been used in political attacks and, during the presidential election, to ruin ballots] either.”

A comment saying: “Somehow you couldn’t see any sincerity or sorrow in her words, and was she drunk or what? She mumbles, can’t even put together a couple of words properly, did she advertise there that she would sell the memoirs of her late husband? And behind the scenes, how they giggled and gave interviews. She plays lousy… She’s a terrible actress, just like the oppositionist.”

A comment saying: “what a pathetic woman… Just dancing on her husband’s grave… What kind of freedom of speech are we talking about? She has already shown herself in all her glory, when she walked out drunk at a concert in honor of her husband a couple of days ago.”

A comment saying: “We heard this speech at Navalny’s birthday party. She can’t put two words together, her tongue is tied. Apparently, Chichvarkin has already treated her to some wine, and maybe not just wine…”

Although posted by different users, all of these comments use the same wording against Yulia Navalnaya, claiming she is “drunk,” “unable to put two words together,” and “benefiting from her husband’s death,” among other insults. Most of the accounts leaving these comments were created between late May and early June 2024.
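Repeated wording of this kind can be checked mechanically. The Python sketch below is a minimal illustration under stated assumptions, not WNM’s actual method: it assumes the comments have already been collected as (author, text) pairs, and the marker phrases and the `phrase_authors`/`shared_phrases` helpers are hypothetical names chosen for this example. It counts how many distinct accounts use each phrase, since one phrase repeated by many accounts is more telling than one account repeating itself.

```python
from collections import defaultdict

# Hypothetical marker phrases drawn from the comments quoted above;
# a real analysis would need a larger, carefully curated list.
MARKER_PHRASES = ["drunk", "two words", "mumbles", "husband"]


def phrase_authors(comments):
    """Map each marker phrase to the set of distinct authors who used it.

    `comments` is an iterable of (author, text) pairs.
    Matching is a simple case-insensitive substring test.
    """
    authors = defaultdict(set)
    for author, text in comments:
        lowered = text.lower()
        for phrase in MARKER_PHRASES:
            if phrase in lowered:
                authors[phrase].add(author)
    return authors


def shared_phrases(comments, min_authors=3):
    """Return phrases used by at least `min_authors` different accounts."""
    return {
        phrase: sorted(names)
        for phrase, names in phrase_authors(comments).items()
        if len(names) >= min_authors
    }


if __name__ == "__main__":
    # Abridged versions of the quoted comments; author labels are placeholders.
    sample = [
        ("user_a", "She mumbles something incomprehensibly, as if she's drunk"),
        ("user_b", "was she drunk or what? She mumbles, can't even put together a couple of words"),
        ("user_c", "Just dancing on her husband's grave... she walked out drunk"),
        ("user_d", "She can't put two words together, her tongue is tied"),
    ]
    print(shared_phrases(sample))  # {'drunk': ['user_a', 'user_b', 'user_c']}
```

Substring matching is crude (it counts “drunk” in any context), so a check like this only surfaces candidates for manual review.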

The “Roman Konovalov” account, which left the first comment, was created on the day the video was published. These accounts use fictional profile pictures and names, often chosen to resemble common Russian names:

However, this account has “Maria Trujillo” in its username but “Daria Filippova” as its display name.

“Daria Filippova” was also seen leaving comments under other videos on Navalny’s channel, all of them supporting the Russian government and its agenda:

Comment saying: “Do you think these people are only those who came to watch the rally in 1918 in Moscow? Don’t you think it’s hypocritical to speak for the entire people of the Russian Federation? That is, those who support Putin are nonhumans? Are these your values in the West? There were elections, the results of which were recognized by many countries, including the USA! And the majority were for Putin, because only with him Russia prospers!”

One of the commenting accounts presented itself as belonging to “Mikhail Chernov”:

However, it used a photo taken from the VK profile of a person named “Alexandr Moskovskiy”:

Attack on Navalny LIVE

The “bots” have been leaving comments under all videos on the Navalny team’s channels, regardless of topic. These comments usually open with a brief remark on the video’s subject, then pivot to broader topics in Russian politics, such as the opposition, Navalny’s team, and the war with Ukraine. For example, a comment was left under a video in which Navalny’s team reviewed the political statements of Artemii Lebedev, a Russian designer who works on government contracts and is known for his explicit political statements.

The initial comment says: “So what’s wrong with Lebedev? After all, he is a real oppositionist who has done everything to ensure that the country develops – at least he proposed tax deductions for medical check-ups by sending such an appeal and proposal to the department. He also knows much more about Ukraine than anyone from the Anti-Corruption Foundation (FBK) because he worked with them, for which Zelensky seized his apartment in the center of Kyiv. And most importantly, he is ready to truly work for his country and for its benefit, rather than selling out to the Americans like the FBK and the others do.” The replying comment says: “The Anti-Corruption Foundation (FBK) sees him as competition; they see that there are those who not only talk but also act while living in Russia.”

This account was also created on the day the video was published.

Besides Navalny’s channel, the campaigns also attacked TV Rain, one of the largest independent news outlets that used to operate in Russia, as well as the prominent opposition blogger Maxim Katz.

User TroyCraw left comments under TV Rain’s video about the crimes of a soldier who returned from the war in Ukraine, saying: “Typical squawking from the foreign agent garbage Rain (Dozhd) to discredit Russian servicemen.” The user also replied to another comment with similar rhetoric: “In the end, they themselves made up these wet stories to get bucks from the USA for discrediting the Russian army.”

The same user, TroyCraw, was also noticed in the comment section of a video by Maxim Katz. He replied to a comment about the skills that a president should possess, continuing his pattern of using rhetoric aimed at discrediting opposition voices and reinforcing pro-government narratives:

Translation of the dialogue: “@user-bk9kn7rm2n: Only a technical person can be president and in leadership positions. Everything really depends on technical specialists. @TroyCraw: President Putin has knowledge in all areas of the country’s life support. From military affairs to artificial intelligence.”

The Troy Crawford account was likewise created a week before the videos it commented on were published:

This report is based on several “bot” accounts that our team managed to find and track. Their strategy primarily involves creating the impression that a large number of users support pro-government agendas. Notably, comments by the “bots” usually receive around 60 likes but often no replies. This indicates that the “bots” work together in networks, creating discussions with each other and replying to and liking each other’s comments. We also noted that the “bots” are prompted by certain trigger words, such as “Ukraine,” “war,” “Putin,” “criminal,” and others.
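The signals described in this section (an account created shortly before the video it comments on, many likes but no replies, and trigger words) can be combined into a simple checklist. The Python sketch below is hypothetical: the `Comment` record, the seven-day and 50-like thresholds, and the trigger list are assumptions for illustration, YouTube does not expose data in this exact form, and the dates and like count in the usage example are invented.

```python
import re
from dataclasses import dataclass
from datetime import date

# Trigger words named in the report; illustrative, not exhaustive.
TRIGGER_WORDS = {"ukraine", "war", "putin", "criminal"}


@dataclass
class Comment:
    """Hypothetical comment record assembled by a researcher."""
    author: str
    text: str
    likes: int
    replies: int
    account_created: date
    video_published: date


def red_flags(c: Comment, max_account_age_days: int = 7, min_likes: int = 50) -> list[str]:
    """Return the heuristic signals a comment triggers (thresholds are assumptions)."""
    flags = []
    # Account created on, or shortly before, the day the video went up.
    age_days = (c.video_published - c.account_created).days
    if 0 <= age_days <= max_account_age_days:
        flags.append("new_account")
    # Many likes but no replies, the pattern the report links to networked accounts.
    if c.likes >= min_likes and c.replies == 0:
        flags.append("likes_without_replies")
    # Whole-word match, so "award" does not count as "war".
    words = set(re.findall(r"\w+", c.text.lower()))
    if words & TRIGGER_WORDS:
        flags.append("trigger_word")
    return flags


if __name__ == "__main__":
    # Invented values for illustration only.
    example = Comment(
        author="example_user",
        text="President Putin has knowledge in all areas of the country's life support.",
        likes=60,
        replies=0,
        account_created=date(2024, 6, 1),
        video_published=date(2024, 6, 6),
    )
    print(red_flags(example))  # ['new_account', 'likes_without_replies', 'trigger_word']
```

No single flag proves coordination; a checklist like this only narrows down which accounts deserve a closer manual look.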

For example, one “bot” account reacts to comments containing phrases like “Putin-criminal” with statements claiming that if Putin were a criminal, Russia would be a totalitarian country rather than the democracy it is. The comment the “bot” reacted to in this case is incoherent and makes no sense.

The translation of the initial comment by user @karlygashabisheva2403 is approximately as follows: “Why is Putin a criminal in collusion with my sister Dariga, so that she holds a monopoly in Kazakhstan and does not let me get close to my father Nursultan Nazarbayev, because the ransom for Great Britain will come to me, and I will become the richest woman on earth. But Putin the criminal miscalculated my status, I am not only the Queen of Sakas but also a global commission.”

This indicates that the “bots” most likely operate based on keywords, often lacking the time or ability to read and comprehend the ongoing discussions and statements made by users. This pattern demonstrates the integrated efforts already in place within Russia and a sophisticated approach to influence operations. It also highlights the risk that such campaigns could be replicated in other parts of the world by different actors and entities.
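One way to test the keyword hypothesis is to compare a reply with the comment it answers: if the only words they share are trigger words, the reply may have been prompted by those keywords rather than by the content. The Python sketch below assumes this heuristic, with a hypothetical trigger list and a deliberately tiny stopword list; the example reply paraphrases the exchange described above rather than quoting it.

```python
import re

# Hypothetical trigger list and a deliberately tiny stopword list.
TRIGGERS = {"putin", "criminal", "ukraine", "war"}
STOPWORDS = {
    "a", "an", "the", "and", "or", "is", "are", "be", "if", "would", "in",
    "of", "to", "with", "my", "so", "that", "why", "rather", "than", "not",
}


def content_words(text: str) -> set[str]:
    """Lowercased word tokens with stopwords removed."""
    return {w for w in re.findall(r"\w+", text.lower()) if w not in STOPWORDS}


def keyword_only_reply(parent: str, reply: str) -> bool:
    """True if the reply shares words with its parent, but only trigger words.

    Such a reply echoes the keywords without engaging with what the parent says.
    """
    shared = content_words(parent) & content_words(reply)
    return bool(shared) and shared <= TRIGGERS


if __name__ == "__main__":
    parent = ("Why is Putin a criminal in collusion with my sister Dariga, "
              "so that she holds a monopoly in Kazakhstan")
    # Paraphrase of the bot's reply described above, not a verbatim quote.
    reply = ("If Putin were a criminal, Russia would be a totalitarian country "
             "rather than a democracy.")
    print(keyword_only_reply(parent, reply))  # True
```

Genuine users can also trip this check, so, like the other heuristics, it only ranks exchanges for human review.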

The WNM team is leveraging its expertise in network analysis and geopolitical knowledge to research and track down these threatening social media campaigns. By identifying and analyzing the patterns and behaviors of these “bots”, the team aims to uncover the broader strategies and intentions behind these coordinated efforts. This work is crucial in understanding the scope and impact of digital influence operations, not just within Russia, but globally, as similar tactics could be deployed by other state and non-state actors to manipulate public opinion and disrupt democratic processes.
